When we set out to share LiveSwitch with the world, we had one vision in mind: to provide WebRTC developers with the most flexible live video solution for the widest range of use cases.

In addition to regular LiveSwitch SDK releases for the incredible range of platforms and languages we support, we have also now made several open-source developer tools available to the public. This blog post will discuss everything you need to know about LiveSwitch Mux, LiveSwitch Connect, and LiveSwitch Cloud Beta Client SDKs and how to get started with them this year.

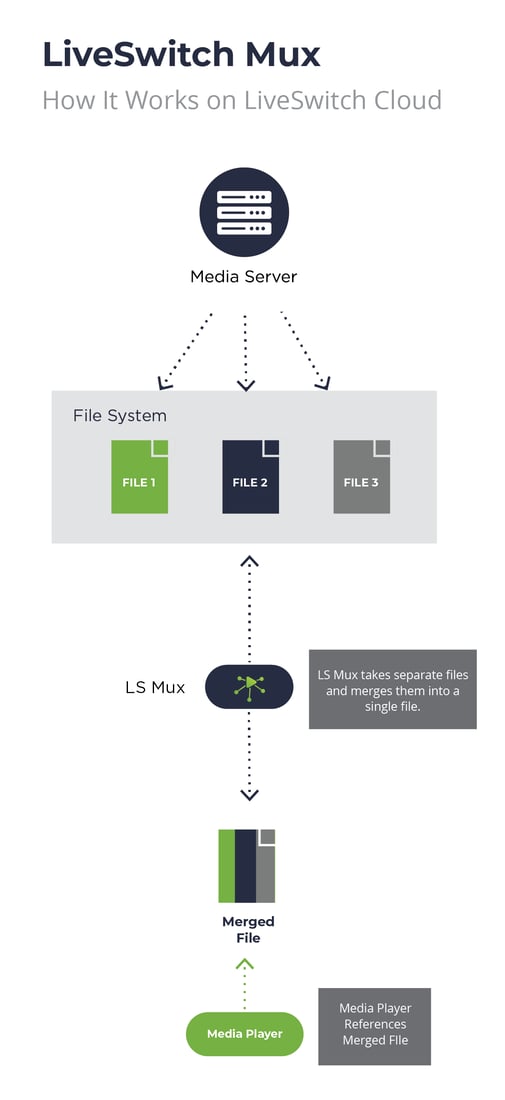

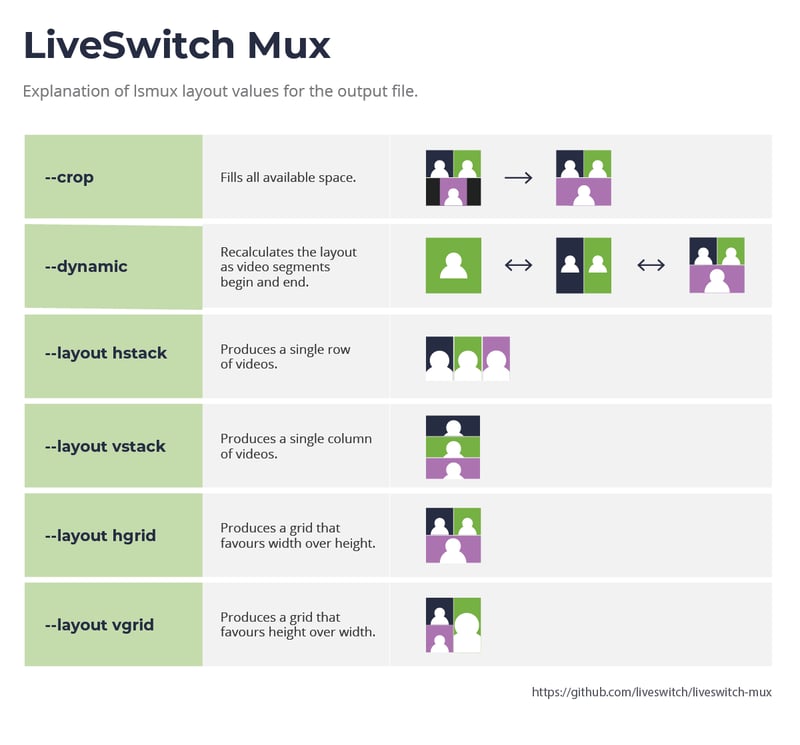

1. LiveSwitch Mux

LiveSwitch Mux is a media muxing tool that combines the individual raw streams stored in a Media Server's file system into a single file (e.g., MP4, MPG, AVI, MKV), producing one output file per LiveSwitch session. It scans the recordings in your directory and looks for completed sessions to combine.

Sessions are recordings of streams that use the same channel ID and application ID over the same time window. Channel and Application IDs can be found in your LiveSwitch Console dashboard.

Sessions are considered complete when:

1) there are no active streaming connections associated with that particular application and channel ID (the session is considered inactive), and

2) there are no overlapping recordings that are still active. If an overlapping recording is still active, the session is considered incomplete and will not be included.
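The session rules above can be sketched in a few lines of TypeScript. This is only an illustration of the grouping logic, not LiveSwitch Mux's actual implementation: the `Recording` shape, its field names, and the functions `groupSessions` and `isComplete` are all hypothetical.

```typescript
// Hypothetical shape of one recorded stream; the real LiveSwitch Mux
// data model is not described in this post and may differ.
interface Recording {
  applicationId: string;
  channelId: string;
  startMs: number;        // recording start, epoch milliseconds
  stopMs: number | null;  // null while the stream is still being recorded
}

// Group recordings into sessions: same application ID and channel ID,
// with time windows that overlap (directly or through a chain of overlaps).
function groupSessions(recordings: Recording[]): Recording[][] {
  const byKey: Record<string, Recording[]> = {};
  for (const r of recordings) {
    const key = JSON.stringify([r.applicationId, r.channelId]);
    if (!byKey[key]) byKey[key] = [];
    byKey[key].push(r);
  }

  const sessions: Recording[][] = [];
  for (const key of Object.keys(byKey)) {
    const sorted = byKey[key].slice().sort((a, b) => a.startMs - b.startMs);
    let current: Recording[] = [];
    let currentStop = -Infinity;
    for (const r of sorted) {
      // A gap after the latest stop time seen so far starts a new session.
      if (current.length > 0 && r.startMs > currentStop) {
        sessions.push(current);
        current = [];
        currentStop = -Infinity;
      }
      current.push(r);
      // An active recording is treated as open-ended.
      const stop = r.stopMs === null ? Infinity : r.stopMs;
      currentStop = Math.max(currentStop, stop);
    }
    if (current.length > 0) sessions.push(current);
  }
  return sessions;
}

// A session is complete only when none of its recordings is still active.
function isComplete(session: Recording[]): boolean {
  return session.every((r) => r.stopMs !== null);
}
```

Under these assumptions, a muxer would call `groupSessions(...)` on everything it finds on disk and then process only the sessions for which `isComplete` returns `true`, leaving the rest for a later scan.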

When do you need to use a muxer?

When audio and video streams are received by the LiveSwitch Media Server, the raw streams are recorded and written to separate files by default. This process is the most efficient for the server: data is written onto the disk with as little overhead as possible, ensuring that all available Media Server resources are allocated towards maintaining the highest quality live streaming possible for the client(s).

In other words, the audio and video encodings retain their original encoding format. For example, when a broadcasting client sends Opus & VP8 encoded streams to the Media Server, the Media Server will write the broadcast media as Opus & VP8 into a Matroska container format that is then stored in the file system.

However, creating a combined single file containing all media tracks from a completed session for viewing post-streaming is a different challenge altogether. Using our example above, merging multiple Opus audio and VP8 video tracks into a container file such as an MP4 file for playback requires a multiplexer (muxer for short).
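To give a rough sense of what a muxing step involves, the sketch below builds an argument list for the general-purpose `ffmpeg` command-line tool. It is a stand-in for illustration only: this post does not show LiveSwitch Mux's own command line, and the function name `buildMuxArgs` and the file names are hypothetical. The sketch assumes one audio file and one video file and writes a Matroska output with stream copy, since MKV can hold Opus and VP8 without re-encoding; other target containers may call for different options.

```typescript
// Build ffmpeg arguments that combine one audio recording and one video
// recording into a single output file without re-encoding.
// Assumption: input 0 holds the audio track and input 1 holds the video track.
function buildMuxArgs(audioPath: string, videoPath: string, outputPath: string): string[] {
  return [
    "-i", audioPath,   // input 0: audio recording
    "-i", videoPath,   // input 1: video recording
    "-map", "0:a:0",   // take the first audio stream from input 0
    "-map", "1:v:0",   // take the first video stream from input 1
    "-c", "copy",      // copy the encoded streams as-is (no transcoding)
    outputPath,
  ];
}

// Example: arguments for muxing two hypothetical recording files.
const muxArgs = buildMuxArgs("audio.mka", "video.mkv", "session.mkv");
```

The resulting array could be passed to a process launcher of your choice to run `ffmpeg`. A real session typically has several participants and therefore several tracks, which is where a dedicated tool becomes useful.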

Video Multiplexing - Use Cases

Subtitles, Closed Captioning, and Webcast Presentations

Combining raw media tracks from a LiveSwitch-hosted Media Server into a playable output file opens up a new dimension of live video streaming. Media tracks that our customers have asked to be multiplexed in the past include 1) closed captioning, 2) subtitles, and 3) screen-shared presentations.

LiveSwitch Mux is an extremely effective tool for combining media tracks. However, because the possibilities of video multiplexing are endless, developers may run into scenarios where a more advanced muxing solution is a better fit for their use case. In those circumstances, our professional developers can help you achieve the desired results.

Overall, LiveSwitch Mux enables developers to create fully customizable recordings from their raw audio and video streams for post-session viewing. With LiveSwitch Mux, broadcasters can conveniently share streamed content from their platform with session participants, who can replay it on any compatible device, while developers keep control over the characteristics of the output file.

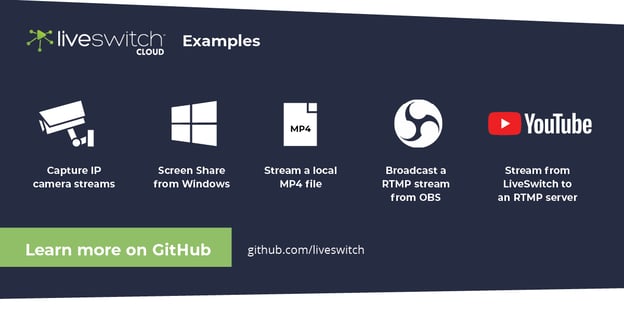

2. LiveSwitch Connect

Another open-source tool developed for LiveSwitch applications is LiveSwitch Connect. Developers can use it to extend their WebRTC-based projects to capture media from non-standard sources (e.g., IP cameras, hardware capture cards, local media files, local screen capture, OBS Studio) and to render and stream media out over other protocols (e.g., RTMP to YouTube).

3. LiveSwitch Connect: HLS Demo

One example of LiveSwitch Connect in action is the open-source LiveSwitch HLS demo, which establishes HLS (HTTP Live Streaming) with your LiveSwitch Cloud-based application.

What is HLS (HTTP Live Streaming)?

HLS (HTTP Live Streaming) is an adaptive bitrate streaming protocol originally developed by Apple for its iOS and macOS clients and later extended to other platforms, browsers, and devices. HLS can conveniently distribute video and audio streams across a content delivery network (CDN) and ensure that these streams are playable on virtually every device today. The widespread adoption of HLS, despite its drawbacks and common criticisms about latency, has ensured its relevance in many broadcasting use cases.

HTTP streaming works by splitting the media into small chunks, each a few seconds long, and transcoding them into a range of bitrates. The client's media player downloads these chunks over HTTP and, based on the client's network connectivity, selects the optimal bitrate for each chunk.
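The per-chunk bitrate choice described above can be sketched as follows. This is a simplified illustration of how an adaptive player might decide, not the algorithm of any particular player or of LiveSwitch HLS: the `Rendition` type, the `pickRendition` function, the example ladder, and the 0.8 safety factor are all assumptions made for the example.

```typescript
// One encoded variant of the stream (hypothetical shape for this sketch).
interface Rendition {
  name: string;
  bitrateKbps: number;
}

// Pick the highest-bitrate rendition that fits within the measured
// throughput, leaving some headroom. Falls back to the lowest rendition
// when even that one exceeds the budget.
function pickRendition(
  ladder: Rendition[],
  measuredKbps: number,
  safetyFactor: number = 0.8 // assumed headroom; real players tune this
): Rendition {
  if (ladder.length === 0) {
    throw new Error("ladder must contain at least one rendition");
  }
  const sorted = ladder.slice().sort((a, b) => a.bitrateKbps - b.bitrateKbps);
  const budget = measuredKbps * safetyFactor;
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.bitrateKbps <= budget) choice = r;
  }
  return choice;
}

// Example ladder with three illustrative bitrates.
const ladder: Rendition[] = [
  { name: "low", bitrateKbps: 400 },
  { name: "medium", bitrateKbps: 1200 },
  { name: "high", bitrateKbps: 3000 },
];
```

With this ladder, a measured throughput of 2000 kbps gives a budget of 1600 kbps, so `pickRendition(ladder, 2000)` selects the `medium` rendition. Repeating the decision for every chunk is what lets playback adapt as network conditions change.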

How Does HLS Streaming Work With LiveSwitch?

LiveSwitch HLS listens for new connections using LiveSwitch webhooks and converts those connections into conventional-latency HLS streams that can be played, paused, and rewound in most HTML5 video players. LiveSwitch HLS is a convenient, open-source tool that lets developers switch on HLS streaming as they need it.

The demo documentation gives LiveSwitch developers instructions for establishing HLS streaming within their application. The demo is a Visual Studio project built using Node.js and TypeScript. After logging in to your LiveSwitch Console and creating an application-level webhook to detect client connection events, you can connect the media sources to LiveSwitch Connect and LiveSwitch HLS for subsequent output to an HTML5 player. If the application is hosted on LiveSwitch Server instead, update the GatewayURL in the app.ts file to point to your LiveSwitch Gateway and follow the rest of the demo documentation.

4. Beta Client SDKs

Developers can get early access to the upcoming minor releases of LiveSwitch Cloud on the platform of their choice. Being able to build and test against upcoming releases ahead of time can speed up platform development for LiveSwitch developers.

Beta Clients are available for Android, .NET, UWP, Xamarin, macOS, Java, Unity, Web, iOS, and tvOS. These beta SDKs are best used in a test environment rather than a production environment and can be accessed easily from within the Cloud Console under “Downloads”.

Wrapping It Up

In the spirit of making LiveSwitch the most flexible live video platform and API out there, we have released a series of community developer tools. LiveSwitch Mux, LiveSwitch Connect, LiveSwitch HLS, and LiveSwitch Cloud Beta Client SDKs empower software developers to customize their LiveSwitch-powered applications beyond what is possible out of the box. For full, advanced customizations and project build-outs that extend beyond the scope of these community tools, start the conversation with our Professional Services team to make your dream platform a reality.