End-to-end (E2E) encryption is a bit of a buzzword these days. Everyone wants it, and every company is jumping into the ring to claim that they have it. It makes sense: who doesn’t want an application where no one but the participants can see what is being shared? However, end-to-end encryption (especially for media within a browser) is extremely new, and there are limitations that are often glossed over.

In this blog post, we will dive deep into what end-to-end video encryption is, how it works, how it differs between native stacks and browsers, and the current limitations that no one is talking about.

What is End-to-End Encryption

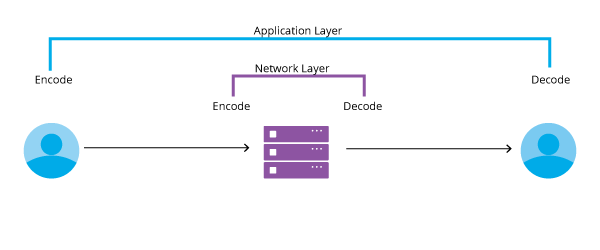

End-to-end (E2E) encryption in video conferencing is a way to secure data that prevents third parties and intermediary servers (SFUs, TURN servers, the Gateway, etc.) from accessing or tampering with it at every hop along the media pipeline.

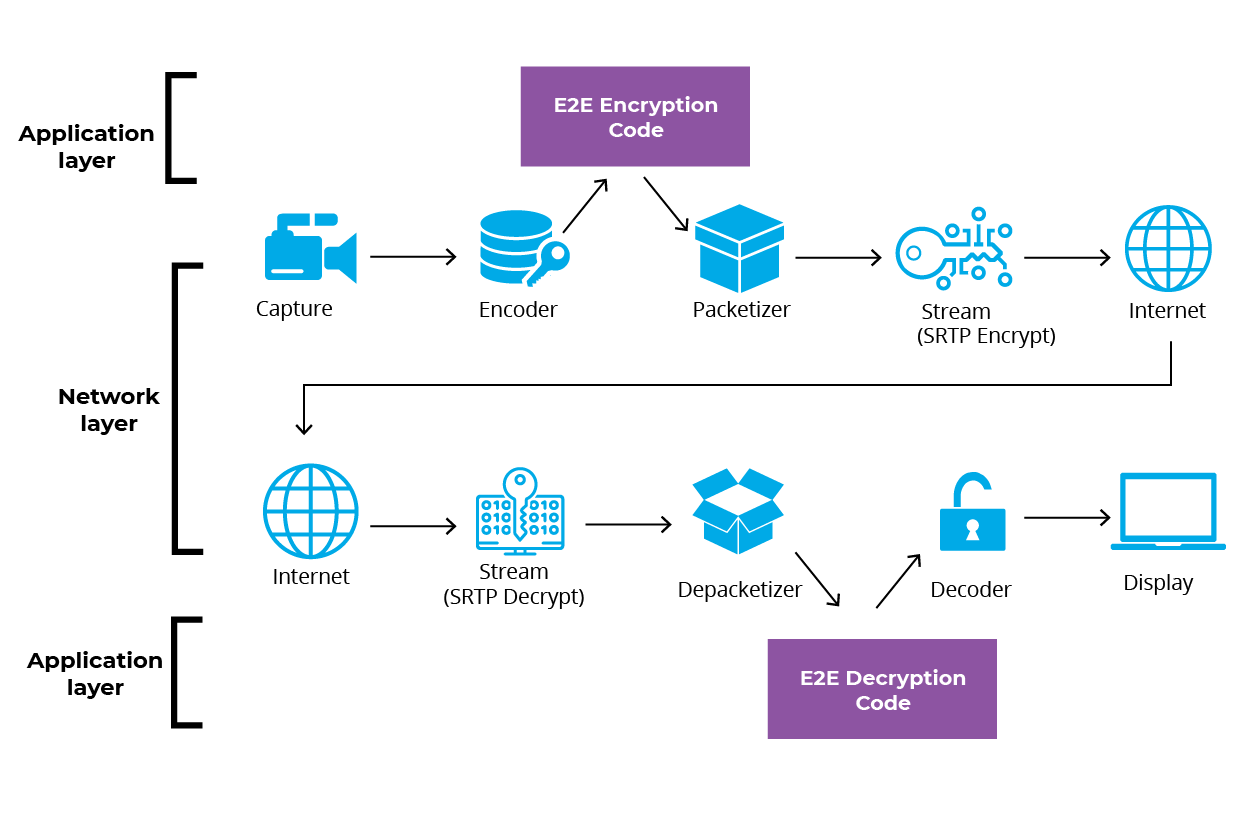

One easy way to think of true E2E encryption is to imagine that all video data, from the moment it is captured by the camera to the moment it is displayed on a screen, is double encrypted. The content is encrypted first at the application layer and a second time at the network layer. The video platform provider generally takes care of the network layer, but the application developer is responsible for encrypting at the application layer.

Due to its very nature, true end-to-end encryption can never be wholly supported out of the box in an SDK. To fully protect the data between the camera and all intermediary servers, the application developer has to encrypt the data at the application layer before it is even sent to the server, and decrypt it again just before it is displayed on the viewer’s screen. This means that developers using WebRTC SDKs like those offered by Frozen Mountain must make critical decisions about how and where they secure their applications. Fortunately for developers, Frozen Mountain’s SDKs stand apart from other SDKs because our media pipeline was designed specifically to give access at every key point in the pipe between clients. This allows developers to build and encrypt their applications any way they want, like this customer did.

Network Encryption - The First Layer

Even without securing the application layer, WebRTC encryption at the network layer is very secure. Encryption is a mandatory part of the WebRTC security architecture and is enforced on every aspect of establishing and maintaining a connection.

At Frozen Mountain we manage network layer security so that you never have to worry about third parties accessing your content. Our products, LiveSwitch Server and LiveSwitch Cloud, are fully encrypted at the network layer using industry-standard protocols (TLS and DTLS). We secure three endpoints that are exposed to the public internet:

- The Gateway

- Administration Console and REST API

- The Media Server

Encrypting the Gateway and Administration Console

The Gateway and the Administration Console in LiveSwitch are standard web servers. Their traffic is encrypted using certificates and Transport Layer Security (TLS), the standard for encryption on the web.

Transport Layer Security (TLS): A widely used cryptographic protocol that provides privacy and data security for communications and online transactions over the Internet. It is an IETF standard that is commonly used in web browsers, file transfer, VPNs, email, and voice over IP (VoIP).

It takes very little effort on the part of the developer to enable TLS encryption in LiveSwitch. Just obtain the certificate for the domain where you host your application (e.g., mydomain.com), upload it to the LiveSwitch Administration Console, and then add an HTTPS binding to your deployment configuration.

Encrypting the Media Server

The LiveSwitch Media Server uses Datagram Transport Layer Security (DTLS) encryption to secure all media flow by default. No configuration is required. DTLS is the industry standard for media encryption in WebRTC communications.

Datagram Transport Layer Security (DTLS): A protocol used to secure datagram-based communications. It is designed to protect delay-sensitive applications like streaming media. It is based on TLS and provides similar security guarantees.

Application Encryption - The Second Layer

To be considered end-to-end encrypted, the application layer must also be encrypted. This means that you must encrypt the messages yourself before sending them to the WebRTC server. How this is done depends on the type of media being sent and on whether you are using the native stack or a web browser. We discuss this in detail below.

Native Stack vs. Browser

When discussing E2E encryption it is important to differentiate between E2E encryption for native apps and E2E encryption for web apps. When companies say that they support E2E encryption, they are generally referring to E2E encryption in a native app. Frozen Mountain has supported E2E encryption in our native stack SDKs for many years, and we are proud to be a leader in doing so. That said, as of today, true E2E encryption in browsers is still wholly dependent on the browser vendor's stack and so cannot yet be done securely, with one exception: in May 2020, Google added an experimental API behind a flag in Chrome that could pave the way for broad E2E encryption support if adopted by other major browser vendors.

End-to-End Encryption - in the Native Stack

To have E2E encryption in your native stack, you need to encrypt at the application layer. How you do this depends on what type of message is being sent. Streaming media such as audio, video, and data channel traffic must be encrypted before it passes through the Media Server, and chat messages must be encrypted before they pass through the Gateway.

Let’s look at how Frozen Mountain tackles each of these individually:

End-to-End Encryption of Messages and Chat in the Native Stack

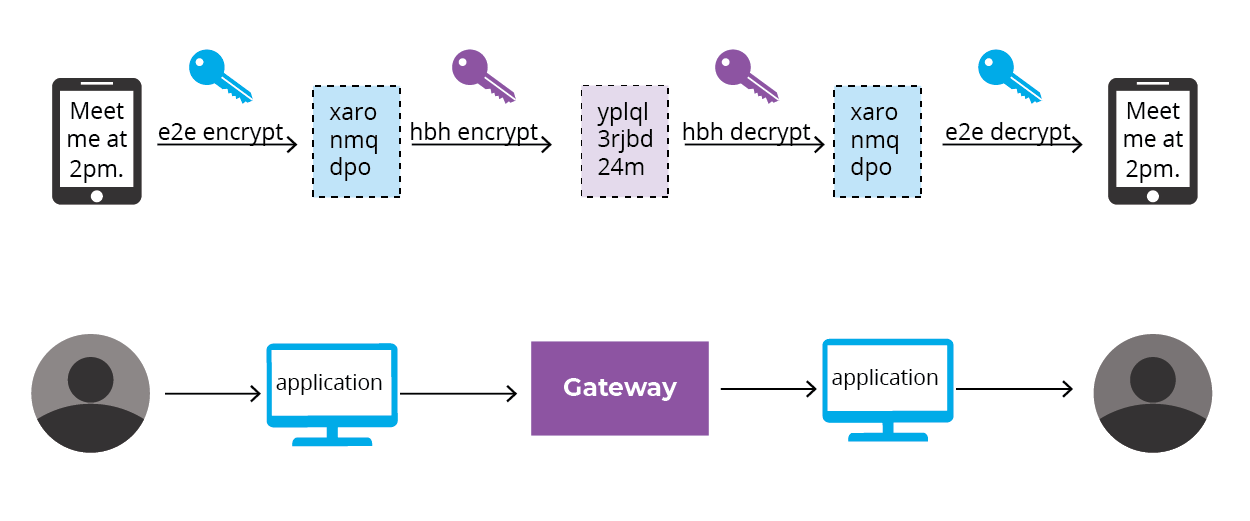

E2E encryption of text-based messages is fairly straightforward in LiveSwitch and has been a feature since its inception. For any application layer message to be E2E encrypted, you must first have a way of generating keys and sharing them with the participants outside of the LiveSwitch environment. There are a number of ways to do that, and LiveSwitch is compatible with all of them.

The most straightforward method is to encrypt and decrypt every message using the same key. This allows anyone with the key to decrypt messages coming from anyone in the conference. Another method is to give each participant a unique key. This means that if I want to decrypt your content, I would need your key.
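To make the two key-management options concrete, here is a minimal C# sketch of a key ring. `ConferenceKeyRing` and its methods are hypothetical names for illustration, not part of the LiveSwitch API, and the sketch assumes keys have already been exchanged between participants out of band, as described above.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;

// Hypothetical key ring (not part of LiveSwitch). Keys are assumed to be exchanged
// between participants out of band, outside the LiveSwitch environment.
public sealed class ConferenceKeyRing
{
    private readonly byte[] _sharedKey; // null when each participant has its own key
    private readonly Dictionary<string, byte[]> _senderKeys = new Dictionary<string, byte[]>();

    private ConferenceKeyRing(byte[] sharedKey) => _sharedKey = sharedKey;

    // Option 1: everyone encrypts and decrypts with the same conference key.
    public static ConferenceKeyRing WithSharedKey(byte[] key) => new ConferenceKeyRing(key);

    // Option 2: each participant encrypts with its own key; add keys as you receive them.
    public static ConferenceKeyRing PerParticipant() => new ConferenceKeyRing(null);

    public void AddSenderKey(string participantId, byte[] key)
    {
        if (_sharedKey != null)
            throw new InvalidOperationException("This key ring uses a single shared key.");
        _senderKeys[participantId] = key;
    }

    // Key needed to decrypt content sent by participantId, or null if we were never given it.
    public byte[] KeyFor(string participantId)
    {
        if (_sharedKey != null)
            return _sharedKey;
        return _senderKeys.TryGetValue(participantId, out var key) ? key : null;
    }

    // Generates a random 256-bit AES key.
    public static byte[] NewKey()
    {
        var key = new byte[32];
        RandomNumberGenerator.Fill(key);
        return key;
    }
}
```

With a shared key, `KeyFor` returns the same key for every sender. With per-participant keys, a lookup for someone whose key you were never given returns `null`, so that participant's content stays unreadable to you.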

Regardless of how you choose to manage your keys, to be considered end-to-end encrypted the messages must be encrypted on the application side before you call the LiveSwitch API to send them to the Gateway. The message that LiveSwitch receives is therefore already encrypted, so even if LiveSwitch wanted to inspect what people were sending, it couldn't, because all it sees is ciphertext. LiveSwitch then encrypts the already-encrypted message a second time at the network layer (TLS between the client and the Gateway), so your messages are protected at both layers.
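The encrypt-before-send step described above can be sketched in C# with AES-GCM from .NET's `System.Security.Cryptography`. This is a minimal sketch, assuming a 128-, 192-, or 256-bit key obtained through one of the key-management schemes above. `MessageCrypto` is a hypothetical helper: the string it returns is what you would pass to whichever LiveSwitch send method your application uses, and on the receiving side you would call `Decrypt` on the string delivered to you.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper (not part of LiveSwitch): encrypts a chat message on the client
// before it is handed to the SDK, so the Gateway only ever relays ciphertext.
public static class MessageCrypto
{
    private const int NonceSize = 12; // nonce length supported by AesGcm
    private const int TagSize = 16;   // 128-bit authentication tag

    // Returns base64(nonce || ciphertext || tag) so the result can travel as an ordinary string.
    public static string Encrypt(byte[] key, string message)
    {
        byte[] plaintext = Encoding.UTF8.GetBytes(message);
        byte[] output = new byte[NonceSize + plaintext.Length + TagSize];

        Span<byte> nonce = output.AsSpan(0, NonceSize);
        RandomNumberGenerator.Fill(nonce); // a fresh random nonce for every message

        using var aes = new AesGcm(key);
        aes.Encrypt(nonce, plaintext,
            output.AsSpan(NonceSize, plaintext.Length),
            output.AsSpan(NonceSize + plaintext.Length, TagSize));
        return Convert.ToBase64String(output);
    }

    // Reverses Encrypt. Throws CryptographicException if the message was modified
    // in transit or the wrong key is used.
    public static string Decrypt(byte[] key, string encoded)
    {
        byte[] input = Convert.FromBase64String(encoded);
        int cipherLength = input.Length - NonceSize - TagSize;
        if (cipherLength < 0)
            throw new CryptographicException("Encrypted message is too short.");

        byte[] plaintext = new byte[cipherLength];
        using var aes = new AesGcm(key);
        aes.Decrypt(input.AsSpan(0, NonceSize),
            input.AsSpan(NonceSize, cipherLength),
            input.AsSpan(NonceSize + cipherLength, TagSize),
            plaintext);
        return Encoding.UTF8.GetString(plaintext);
    }
}
```

AES-GCM is chosen here because it authenticates as well as encrypts: a message altered in transit, or opened with the wrong key, raises an exception instead of silently producing garbage.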

End-to-End Encryption of Data Channels in the Native Stack

Encrypting data channels at the application layer is very similar to encrypting text-based messages. The main difference is that data channel messages travel through the Media Server rather than the Gateway. In both cases, as far as LiveSwitch is concerned, it is just relaying data: its job is to shuffle that data back and forth regardless of its type or length.

End-to-End Encryption of Audio in the Native Stack

LiveSwitch is compatible with end-to-end encryption for audio on our native stacks and has been for a very long time. If you want to end-to-end encrypt your audio streams, you first need to gain access to the audio frame/packet after compression in order to encrypt it before it is sent to the Media Server.

Thanks to the very flexible nature of LiveSwitch’s media pipeline, you can do this easily by attaching to the “OnProcessFrame” event of the outbound audio stream and encrypting the frame right there. It then goes out over the wire as ciphertext, and on the remote side you can access the frame through the “OnRaiseFrame” event of the inbound audio stream and decrypt it.

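```csharp
/* Create an audio source. */
protected override AudioSource CreateAudioSource(AudioConfig config)
{
    // Try for DMO since it offers the best quality audio.
    var audioSource = new Dmo.VoiceCaptureSource(!AecDisabled);

    // Raised when the audio source captures a frame and raises it to the pipeline.
    audioSource.OnRaiseFrame += (audioFrame) =>
    {
        // EncryptionProvider is application code, not part of the SDK. It is assumed to
        // encrypt the frame's buffer in place: reassigning the audioFrame parameter here
        // would not change the frame that continues down the pipeline.
        // Note that a capture source raises frames before they are encoded; to encrypt
        // compressed frames, attach the handler to the outbound audio stream's
        // OnProcessFrame event, as described above.
        EncryptionProvider.EncryptAudioFrame(audioFrame);
    };

    return audioSource;
}
```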
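The snippet above leaves the body of `EncryptionProvider.EncryptAudioFrame` to the application. The following sketch shows one way to seal compressed audio frames one at a time, assuming AES-GCM and raw byte arrays. `AudioFrameCipher`, the sender ID, and the packet layout are hypothetical, and copying bytes to and from a LiveSwitch `AudioFrame` is not shown.

```csharp
using System;
using System.Buffers.Binary;
using System.Security.Cryptography;

// Hypothetical per-sender cipher for compressed audio frames (not a LiveSwitch type).
// Each frame is sealed on its own, which works because compressed audio frames can
// be decoded independently of each other.
public sealed class AudioFrameCipher : IDisposable
{
    private const int CounterSize = 8;
    private const int TagSize = 16;
    private readonly AesGcm _aes;
    private readonly uint _senderId; // must be unique among senders sharing this key
    private ulong _nextCounter;

    // Never create two encrypting instances with the same key and sender ID:
    // the counter would restart and nonces would repeat.
    public AudioFrameCipher(byte[] key, uint senderId)
    {
        _aes = new AesGcm(key);
        _senderId = senderId;
    }

    // Packet layout: 8-byte frame counter || ciphertext || 16-byte tag.
    public byte[] Encrypt(ReadOnlySpan<byte> frame)
    {
        ulong counter = _nextCounter++;
        byte[] packet = new byte[CounterSize + frame.Length + TagSize];
        BinaryPrimitives.WriteUInt64BigEndian(packet, counter);

        Span<byte> nonce = stackalloc byte[12];
        BuildNonce(counter, nonce);
        _aes.Encrypt(nonce, frame,
            packet.AsSpan(CounterSize, frame.Length),
            packet.AsSpan(CounterSize + frame.Length, TagSize));
        return packet;
    }

    // Decrypts a packet produced by the sender whose ID this instance was created with.
    public byte[] Decrypt(ReadOnlySpan<byte> packet)
    {
        int frameLength = packet.Length - CounterSize - TagSize;
        if (frameLength < 0)
            throw new CryptographicException("Packet is too short.");

        ulong counter = BinaryPrimitives.ReadUInt64BigEndian(packet);
        Span<byte> nonce = stackalloc byte[12];
        BuildNonce(counter, nonce);

        byte[] frame = new byte[frameLength];
        _aes.Decrypt(nonce, packet.Slice(CounterSize, frameLength),
            packet.Slice(CounterSize + frameLength, TagSize), frame);
        return frame;
    }

    // 12-byte nonce = 4-byte sender ID || 8-byte frame counter.
    private void BuildNonce(ulong counter, Span<byte> nonce)
    {
        BinaryPrimitives.WriteUInt32BigEndian(nonce, _senderId);
        BinaryPrimitives.WriteUInt64BigEndian(nonce.Slice(4), counter);
    }

    public void Dispose() => _aes.Dispose();
}
```

Because each packet carries its own counter, the receiver can rebuild the nonce and decrypt any frame that arrives, even if earlier frames were lost or reordered. The cost is 24 extra bytes per frame (an 8-byte counter plus a 16-byte tag). Combining a sender ID with the counter keeps nonces unique when several senders share one key, which AES-GCM requires.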

End-to-End Encryption of Video in the Native Stack

End-to-end video chat encryption is more complicated than audio. This is because compressed video frames often depend on other compressed video frames, while compressed audio frames can be decoded independently of each other.

A keyframe in a video stream acts as the foundation for subsequent frames. The keyframe is sent out first and is followed by “delta” frames that contain compressed information about the changes since the previous frame. LiveSwitch is optimized to ensure that the first frame you receive is a keyframe, but it can only do this if the frame header is not encrypted when it reaches the Media Server.

To make this possible, the first few bytes of each frame need to be left unencrypted. The exact number of bytes depends on the codec (VP8, VP9, H.264). For example, with VP8 we need to leave 3 to 10 bytes unencrypted, because those are the bytes that make up the frame header: 3 bytes for a delta frame and 10 for a keyframe. Without access to the frame header, LiveSwitch cannot reliably send video because it does not know what type of frame it is or where it falls in the sequence.
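The 3-to-10-byte range for VP8 follows from the frame layout in RFC 6386 (section 9.1): every frame begins with a 3-byte frame tag whose lowest bit is 0 for a keyframe, and a keyframe adds a 3-byte start code (0x9D 0x01 0x2A) plus 4 bytes of width and height. The sketch below assumes a complete, depacketized VP8 frame; `Vp8Header` is a hypothetical name, not a LiveSwitch type.

```csharp
using System;

// Hypothetical helper (not a LiveSwitch type) that reads the VP8 frame header
// defined in RFC 6386, section 9.1, from a complete, depacketized VP8 frame.
public static class Vp8Header
{
    private const int FrameTagLength = 3;        // present on every VP8 frame
    private const int KeyFrameHeaderLength = 10; // frame tag + start code + width/height

    // The lowest bit of the first byte is the frame type: 0 = keyframe, 1 = interframe.
    public static bool IsKeyFrame(ReadOnlySpan<byte> frame)
    {
        if (frame.Length < FrameTagLength)
            throw new ArgumentException("A VP8 frame starts with a 3-byte frame tag.", nameof(frame));
        return (frame[0] & 0x01) == 0;
    }

    // Number of leading bytes to leave unencrypted so a media server can classify the frame.
    public static int UnencryptedHeaderLength(ReadOnlySpan<byte> frame)
    {
        if (!IsKeyFrame(frame))
            return FrameTagLength;

        // Keyframes continue with the start code 0x9D 0x01 0x2A and 4 bytes of dimensions.
        if (frame.Length < KeyFrameHeaderLength ||
            frame[3] != 0x9D || frame[4] != 0x01 || frame[5] != 0x2A)
            throw new ArgumentException("Keyframe is missing the VP8 start code.", nameof(frame));
        return KeyFrameHeaderLength;
    }
}
```

A server that can read these few clear bytes can tell a keyframe from a delta frame without ever seeing the encrypted picture data.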

```csharp
if (Format.IsVp8 || Format.IsVp9 || Format.IsH264)
{
    var dataBuffer = DataBuffer;
    if (dataBuffer == null)
    {
        // can’t be a keyframe if there is no data…
        return false;
    }

    if (Format.IsPacketized)
    {
        // wrap and analyze payload
        VideoFragment fragment = null;
        if (Format.IsVp8)
        {
            fragment = new Vp8.Fragment(RtpHeader, dataBuffer);
            if (!fragment.First)
            {
                return false;
            }
        }
        else if (Format.IsVp9)
        {
            fragment = new Vp9.Fragment(RtpHeader, dataBuffer);
            if (!fragment.First)
            {
                return false;
            }
        }
        else if (Format.IsH264)
        {
            //TODO: this is a destructive operation and MUST NOT be
            fragment = new H264.Fragment(RtpHeader, dataBuffer);
        }

        dataBuffer = fragment.Buffer;
        // … (rest of the keyframe check not shown)
    }
}
```
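Once the number of clear bytes is known, the rest of the frame can be encrypted. A minimal sketch, assuming AES-GCM: the clear header is copied to the output unchanged and also passed as associated data, so the Media Server can read it, but any change to it makes decryption fail. `PartialFrameCipher` and its packet layout are hypothetical, and both sides are assumed to agree on `clearLength` (for VP8, the receiver can recompute it from the clear header itself).

```csharp
using System;
using System.Security.Cryptography;

// Hypothetical helper (not part of LiveSwitch): encrypts a video frame but leaves its
// first clearLength bytes readable so a media server can still inspect the codec header.
public static class PartialFrameCipher
{
    private const int NonceSize = 12;
    private const int TagSize = 16;

    // Packet layout: clear header || nonce || encrypted body || tag.
    public static byte[] Encrypt(byte[] key, ReadOnlySpan<byte> frame, int clearLength)
    {
        if (clearLength < 0 || clearLength > frame.Length)
            throw new ArgumentOutOfRangeException(nameof(clearLength));

        ReadOnlySpan<byte> header = frame.Slice(0, clearLength);
        ReadOnlySpan<byte> body = frame.Slice(clearLength);
        byte[] packet = new byte[clearLength + NonceSize + body.Length + TagSize];
        header.CopyTo(packet); // the header travels unencrypted

        Span<byte> nonce = packet.AsSpan(clearLength, NonceSize);
        RandomNumberGenerator.Fill(nonce);

        using var aes = new AesGcm(key);
        // Passing the header as associated data authenticates it without encrypting it.
        aes.Encrypt(nonce, body,
            packet.AsSpan(clearLength + NonceSize, body.Length),
            packet.AsSpan(clearLength + NonceSize + body.Length, TagSize),
            header);
        return packet;
    }

    // Throws CryptographicException if the header or body was altered, or the key is wrong.
    public static byte[] Decrypt(byte[] key, ReadOnlySpan<byte> packet, int clearLength)
    {
        int bodyLength = packet.Length - clearLength - NonceSize - TagSize;
        if (clearLength < 0 || bodyLength < 0)
            throw new CryptographicException("Packet is too short.");

        ReadOnlySpan<byte> header = packet.Slice(0, clearLength);
        byte[] frame = new byte[clearLength + bodyLength];
        header.CopyTo(frame);

        using var aes = new AesGcm(key);
        aes.Decrypt(packet.Slice(clearLength, NonceSize),
            packet.Slice(clearLength + NonceSize, bodyLength),
            packet.Slice(clearLength + NonceSize + bodyLength, TagSize),
            frame.AsSpan(clearLength),
            header);
        return frame;
    }
}
```

A random nonce per frame keeps the sketch short. With random 96-bit nonces, NIST SP 800-38D limits a single key to 2^32 encryptions, so long-running video sessions should rotate keys well before that point.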

End-to-End Encryption within a Browser - The current state of affairs

While end-to-end encryption in the native stack has been around for a while, E2E encryption in a browser is a completely different story. Historically, web browsers have not given developers any means of modifying audio or video before sending it or playing it back, so E2E encryption in a browser was impossible.

Over the last few years there have been a couple of IETF drafts that have tried to create a standard to tackle the end-to-end encryption problem. The most promising were:

- Privacy Enhanced RTP Conferencing (PERC) - Draft has expired

- PERC Lite

- Frame Marking - Google Chrome has discontinued support

However, none of the proposed methods gained the traction or the browser buy-in they needed to be usable in the real world, and support for these drafts has since been discontinued. This left everyone needing E2E encryption in the browser with no options.

Insertable Streams - a Promising but New Model

However, this may change soon with Insertable Streams, a new experimental Chrome feature introduced on May 27, 2020.

Insertable Streams seeks to solve the problem of E2E encryption in the browser by letting developers expose components of an RTCPeerConnection as a collection of streams, and then manipulate them: introduce new components, or wrap or replace existing ones. This gives the application developer the ability to rebuild frames so that they include a small piece of unencrypted header information that is needed to reliably send them over the wire, while the rest of the media stays encrypted. This can be done on a per-codec basis and tailored to the unique needs of each media server rather than to a rigid industry standard.

Sounds promising, right? It is, but the project is still experimental. This means that to enable it, you and your users will have to go into Chrome’s settings and turn on experimental features. This could be a barrier to entry for some user bases, but for companies with strict IT guidelines and control, end-to-end encryption in its current form will soon be a possibility.

Wrap it Up

So where does that leave us? End-to-end encryption is a complicated topic. While E2E encryption in the native stack is well developed and has been possible for years, E2E encryption in the browser is brand new and at the early stages of experimentation. There is no company out there today that can honestly claim to have full support for E2E encryption in the browser because it is just too new.

The industry is just now at the precipice of E2E encryption in the browser. It is an exciting time, and companies are racing to add it to their product lineups. However, this environment is changing fast, and video platform providers will have to be able to change with it. At Frozen Mountain, we are continually experimenting with and implementing proof-of-concept E2E encryption schemes. However, we are cognizant that we must remain flexible, because the browser vendors’ APIs and direction could change at any moment.