Virtual reality (VR) is a rapidly emerging technology with the potential to change the way we interact with our world and communicate with each other. With the global market for augmented reality (AR) and VR forecast to exceed $209 billion by 2022, it is no wonder that forward-thinking tech companies are investing more in this up-and-coming technology.

Traditionally, much of the focus in virtual reality has been on creating visually immersive experiences that let the brain perceive the virtual world as if it were the real world. However, visuals are only one component of immersion. Audio plays just as important a role: if the sound is out of sync with what the user sees, the mismatch confuses the brain and disrupts the user’s immersion and overall experience. This is where 3D spatial audio comes in.

Spatial audio defined

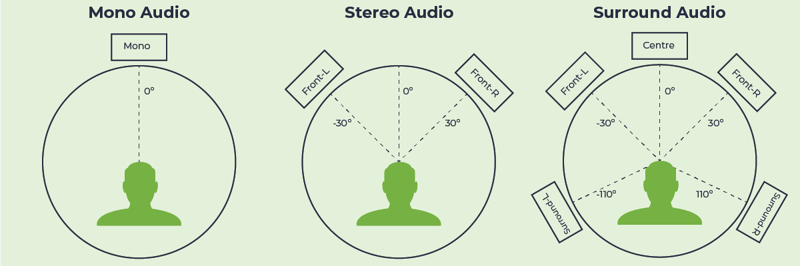

Although “spatial audio” is a bit of a buzzword in the virtual reality community, the concept has actually been around for a long time and encompasses a number of different audio types. At its most basic, spatial audio is simply any audio that is not mono (i.e., audio carried on a single channel). Stereo audio (i.e., audio recorded and mixed in separate left and right channels) could therefore be considered spatial audio. Other forms of audio that could also be considered “spatial” include surround sound and binaural audio.

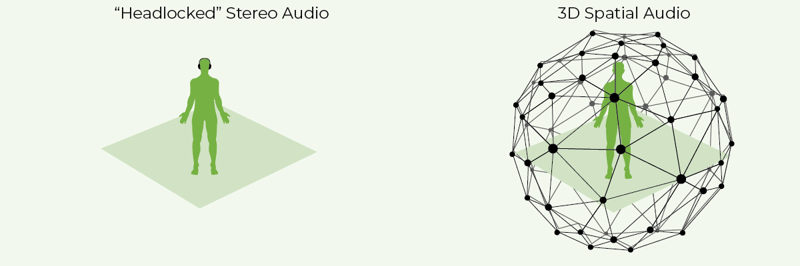

While these types of audio do increase the immersion a listener feels in a typical “head-locked” environment such as a movie theatre, they are insufficient for a virtual reality experience. In a virtual world, you move about and look around just as you do in the real world. If you hear a bird chirping in a tree to your left, you should hear that sound loudest in your left ear. But if you turn your head to look directly at the bird, the sound should become equally balanced between both ears. This is the challenge developers face when creating spatial audio for their VR applications: if audio levels and direction do not respond to even subtle changes in head position, the user experience can suffer badly.
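To make the bird example concrete, here is a minimal sketch of how left/right levels could follow head orientation. It uses a simple equal-power pan law as a stand-in for a full 3D audio renderer (real engines typically use head-related transfer functions, or HRTFs), and the function name `pan_gains` and its angle convention are illustrative assumptions, not part of any LiveSwitch API.

```python
import math


def pan_gains(source_azimuth_deg: float, head_yaw_deg: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a sound source using equal-power panning.

    Hypothetical helper. Angles are in degrees, clockwise from straight ahead
    (0 = front, +90 = right, -90 = left). Front and back are not distinguished.
    """
    # Direction of the source relative to where the head faces, wrapped to [-180, 180).
    relative = (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0
    # Fold sources behind the listener onto the front half so the pan
    # position stays within [-90, 90].
    if relative > 90.0:
        relative = 180.0 - relative
    elif relative < -90.0:
        relative = -180.0 - relative
    # Map [-90, 90] degrees onto a pan angle in [0, pi/2].
    theta = (relative + 90.0) / 180.0 * (math.pi / 2)
    # cos^2 + sin^2 = 1, so total power stays constant as the sound moves.
    return math.cos(theta), math.sin(theta)


# Bird on the left, head facing forward: almost all signal in the left ear.
print(pan_gains(-90.0, 0.0))
# Head turned to face the bird: both ears receive the same level.
print(pan_gains(-90.0, -90.0))
```

The equal-power law is chosen over a linear crossfade because it keeps perceived loudness roughly constant as the source sweeps across the stereo field.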

3D spatial audio defined

The goal of 3D spatial audio is to convincingly place sounds in a three-dimensional space so that the user perceives them as coming from the objects they see in their VR experience. Although in VR you can only see what is in front of you, spatial audio can clue the user in to what is taking place above, below, behind, and beside them. In 3D spatial audio, unlike stereo audio, the sound is locked to the space rather than to your head. This means you can move around the room and the sound will remain fixed relative to your surroundings. To generate it, you must combine the audio with coordinate data that lets the system track even subtle head movements.
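The difference between head-locked and world-locked sound comes down to a coordinate transform: the source stays at a fixed world position, and each time the listener moves or turns, its direction is recomputed relative to the head. The sketch below shows that step on the horizontal plane only; the `Listener` type, the `head_relative` function, and the axis convention (yaw measured clockwise from the world +z axis, +x to the right) are assumptions for illustration, not LiveSwitch code.

```python
import math
from dataclasses import dataclass


@dataclass
class Listener:
    """Hypothetical listener pose on the horizontal plane."""
    x: float        # world position, metres
    z: float
    yaw_deg: float  # heading, clockwise from the world +z axis


def head_relative(listener: Listener, src_x: float, src_z: float) -> tuple[float, float]:
    """Return (azimuth_deg, distance) of a world-fixed source as heard by the listener.

    Azimuth is in [-180, 180): 0 = straight ahead, +90 = right, -90 = left.
    """
    dx = src_x - listener.x
    dz = src_z - listener.z
    distance = math.hypot(dx, dz)
    # World bearing of the source, clockwise from +z.
    bearing = math.degrees(math.atan2(dx, dz))
    # Subtracting the head's yaw keeps the sound fixed in the world,
    # not to the head.
    azimuth = (bearing - listener.yaw_deg + 180.0) % 360.0 - 180.0
    return azimuth, distance


you = Listener(x=0.0, z=0.0, yaw_deg=0.0)
print(head_relative(you, -1.0, 0.0))  # source on your left
you.yaw_deg = -90.0                   # turn to face it
print(head_relative(you, -1.0, 0.0))  # now straight ahead
```

A full implementation would also handle elevation and distance attenuation, but the principle is the same: the head pose, not the audio file, determines where the sound appears to come from.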

The LiveSwitch Advantage

Achieving 3D spatial audio is not easy. However, LiveSwitch offers two key advantages for creating the 3D spatial audio effect in your VR application.

Advantage #1: Low-level audio data access and conversion utilities

LiveSwitch gives application developers access to low-level audio data through the RTC audio pipeline. Developers can configure the audio format of the audio source so that the device generates data in the format they need. To manipulate or monitor the generated audio data, developers can register event handlers at each audio processing step, such as the audio source, encoder, packetizer, depacketizer, decoder, and audio sink. In addition, LiveSwitch's sound utilities make it easy to change the sampling rate and channel count, which covers most audio conversion use cases.
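For readers unfamiliar with what "changing the sampling rate and channel count" involves, the sketch below shows the two operations on raw PCM samples in plain Python: downmixing interleaved stereo to mono, then resampling (for example, 48 kHz to 16 kHz). This is a generic illustration, not LiveSwitch's sound utilities, whose API is not reproduced here; the function names are hypothetical, and the linear-interpolation resampler deliberately omits the anti-aliasing filter a production converter would apply.

```python
def stereo_to_mono(interleaved: list[float]) -> list[float]:
    """Average each left/right pair of an interleaved stereo buffer (L, R, L, R, ...)."""
    if len(interleaved) % 2:
        raise ValueError("interleaved stereo buffer must have an even length")
    return [(interleaved[i] + interleaved[i + 1]) / 2.0
            for i in range(0, len(interleaved), 2)]


def resample_linear(samples: list[float], src_rate: int, dst_rate: int) -> list[float]:
    """Resample a mono buffer by linear interpolation (no anti-aliasing filter)."""
    if not samples or src_rate == dst_rate:
        return list(samples)
    out_len = round(len(samples) * dst_rate / src_rate)
    out = []
    for n in range(out_len):
        pos = n * src_rate / dst_rate  # fractional position in the source buffer
        i = int(pos)
        frac = pos - i
        # Clamp the neighbour index at the end of the buffer.
        nxt = samples[min(i + 1, len(samples) - 1)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
    return out


mono = stereo_to_mono([1.0, 0.0, 0.5, 0.5])
print(mono)
print(resample_linear([0.0, 1.0, 2.0, 3.0], 2, 4))
```

In a real pipeline this kind of conversion would run inside one of the processing steps listed above, so that the audio reaching the spatializer is in the format it expects.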


Advantage #2: WebRTC Data Channels to send coordinate data

To create the 3D spatial audio effect, you need to combine audio (resampled into the correct format) with coordinate data describing the user's head position and location in the virtual world. LiveSwitch's API has powerful data transmission capabilities that let it transfer any type of data, including coordinates, mesh data (MPEG-4 FBA), depth, colour, and metadata. Not only does LiveSwitch's API take advantage of WebRTC data channels, but it can also broadcast those data channels over MCU (multipoint control unit) and SFU (selective forwarding unit) connections, greatly reducing bandwidth and latency in multi-party VR communication use cases.
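Before head-pose coordinates can travel over a data channel, they have to be serialized. The sketch below packs a pose into a compact 32-byte binary message with Python's standard `struct` module. The layout (a sequence number, a position, and an orientation quaternion) and the names `HeadPose`, `encode_pose`, and `decode_pose` are hypothetical, not a LiveSwitch message format, and the call that actually sends the bytes depends on LiveSwitch's data channel API, which is not shown.

```python
import struct
from typing import NamedTuple

# Hypothetical wire format: little-endian uint32 sequence number, then
# position (x, y, z) and orientation quaternion (w, x, y, z) as 32-bit floats.
POSE_FORMAT = struct.Struct("<I7f")  # 4 + 7 * 4 = 32 bytes


class HeadPose(NamedTuple):
    seq: int
    px: float
    py: float
    pz: float
    qw: float
    qx: float
    qy: float
    qz: float


def encode_pose(pose: HeadPose) -> bytes:
    """Serialize a pose into a fixed-size binary message."""
    return POSE_FORMAT.pack(*pose)


def decode_pose(data: bytes) -> HeadPose:
    """Parse a message produced by encode_pose."""
    return HeadPose(*POSE_FORMAT.unpack(data))


msg = encode_pose(HeadPose(7, 1.5, 0.0, -2.25, 1.0, 0.0, 0.0, 0.0))
print(len(msg), decode_pose(msg))
```

A fixed binary layout keeps each update small, which matters when poses are sent many times per second to every participant. The sequence number lets a receiver discard stale updates if the channel is configured for unordered delivery, since only the most recent pose is useful for positioning audio. Note that 32-bit floats lose some precision compared with Python's 64-bit floats.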